AI adoption in the workplace is growing rapidly. HR professionals, finance teams and business leaders are increasingly using AI to speed up their processes and make more informed decisions. As AI becomes more integrated into our daily work, regulation is not far behind. The EU AI Act entered into force in 2024 and will be phased in across European Union member states, with full application by 2027. The new AI Act aims to promote transparency, ensure AI systems are used safely and minimize risks for users. In this guide, we’ll explain what this new AI regulation means for companies in Europe, the UK, and beyond, since the EU AI Act may apply to your business even if it’s based outside the EU. By understanding the EU AI Act early, you can reduce compliance risks and foster a company culture built on transparency.

Key Takeaways:

- The EU AI Act is the first comprehensive EU measure to regulate AI.

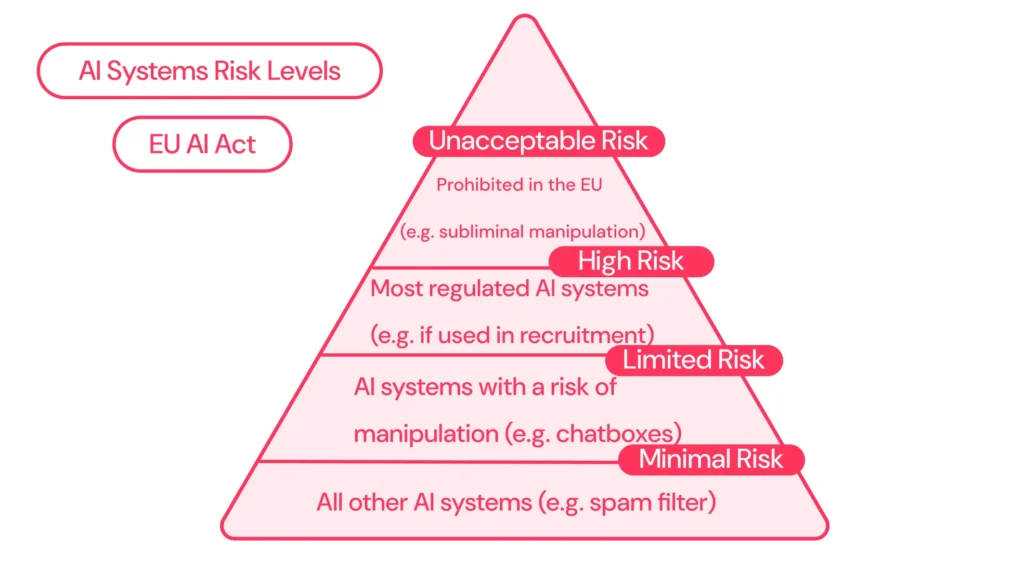

- According to the law, AI systems will be regulated with varying degrees of strictness depending on the potential risk to users and society.

- All AI applications must be traceable, transparent and designed to prevent the creation of illegal content.

- UK employers working with EU employees, clients, or vendors may need to prepare their businesses to comply.

Why the EU AI Act Matters for the UK & Global Businesses

In March 2023, the UK’s Department for Science, Innovation and Technology published a white paper titled ‘A Pro-Innovation Approach to AI Regulation’. This framework outlines guiding principles to follow, including safety, transparency, fairness and accountability. However, these principles do not impose specific compliance obligations on businesses. UK companies are not legally required to adhere to these guidelines, nor will they be penalised for not following them.

The EU AI Act, on the other hand, sets a clear precedent. It is likely that the UK, along with other countries, will follow suit shortly by developing its own set of AI regulations. This new AI governance means employers, CEOs and business leaders, including those in HR, will have to learn how to mitigate compliance risk when using AI in the workplace. Global businesses that are proactive and implement ethical and safe AI systems will be better prepared when rules on artificial intelligence begin to roll out in their region.

What’s the difference between the UK and EU’s approach to AI Regulation?

The UK’s AI guidelines are exactly that: guidelines. They give employers flexibility, with no legal enforcement, when it comes to safe AI implementation. The EU AI Act, by contrast, defines strict and specific regulations that will be enforced, and businesses that are not compliant will face heavy penalties. Additionally, the EU AI Act applies to companies outside of the EU if they offer, distribute, or operate AI systems within the EU. This can greatly impact UK employers who have employees, offices, or businesses in any EU member state.

What is the EU AI Act?

The EU AI Act is a set of rules that applies across the EU member states with the intent to regulate artificial intelligence (AI). It aims to protect fundamental rights while giving space to science and AI innovation in research and industry. This is intended to strengthen trust in AI systems.

The law follows a so-called risk-based approach, which means that AI systems are regulated with varying degrees of strictness depending on their potential risk to users and society.

The official EU AI Act description can be found here:

- AI Act EU PDF (English)

Who will be affected by the EU AI Act?

The EU AI Act imposes obligations on companies that provide, distribute or use AI applications. Article 3 of the AI Regulation defines who the law is aimed at:

- Provider: Natural or legal persons, public authorities, or other bodies that develop, or commission the development of, an AI system or a general-purpose AI model and place it on the market or put it into service under their own name or trademark. This is irrespective of whether this is done for a fee or free of charge.

- Operator (called ‘deployer’ in the official English text): Actors who use an AI system under their own authority in a professional or business context.

- Authorised representative: Actors who have been authorised in writing by the provider to fulfil certain obligations under the AI Regulation on its behalf.

- Importer: Natural or legal persons established in the EU who place on the Union market an AI system bearing the name or trademark of a person established outside the EU.

- Distributor: Natural or legal persons in the supply chain, other than the provider or the importer, who make an AI system available on the EU market.

The main idea of the regulation, which must be followed and implemented by the aforementioned actors, is that AI systems can pose risks to fundamental rights. Therefore, AI models are classified according to the risk they pose to fundamental rights. The higher the risk, the stricter the requirements actors must comply with, for example regarding transparency, documentation, or oversight.

EU AI Act Summary

Here is a summary of the key points of the EU AI Act:

Risk-based classification of AI systems

The EU classifies AI systems according to the risk they pose to people and society. This means that the higher the risk, the stricter the requirements for transparency, security, and monitoring.

Prohibition of applications with unacceptable risk:

Certain applications that manipulate or discriminate against people are prohibited. These include, for example:

- Voice-controlled toys that encourage dangerous behavior in children

- Social scoring systems that classify people based on certain characteristics (e.g., social behavior or socioeconomic status)

- Real-time remote biometric identification in public spaces, such as facial recognition

According to the EU AI Act, these applications are considered an unacceptable risk to people and society.

High-risk AI systems:

AI systems that pose a high risk are permitted under certain conditions, such as testing, monitoring, and certification. They are divided into two main categories:

- AI systems in products (e.g. medical devices, vehicles, toys)

- AI systems in certain areas of society (e.g., critical infrastructure, education, law enforcement)

All high-risk AI systems will be assessed throughout their life cycle. In addition, EU citizens will have the opportunity to file complaints about AI systems.

Other risk classes are:

- Limited risk: A chatbot that provides customers with general product information. It doesn’t provide safety-critical information, but users need to be aware that the answers are AI-generated.

- Minimal risk: An AI application for spelling and grammar checking in text documents. It supports users but poses virtually no risk to health, safety, or fundamental rights.
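The four risk classes described above can be pictured as an ordered scale. The following is a minimal Python sketch, assuming a hypothetical `RiskTier` enum and an `EXAMPLE_TIERS` lookup filled only with examples from this article. It is an illustration of the risk-based idea, not a legal classification tool; classifying a real system requires legal review.

```python
from enum import IntEnum


class RiskTier(IntEnum):
    """Hypothetical ordering of the risk classes; higher means stricter."""
    MINIMAL = 1
    LIMITED = 2
    HIGH = 3
    UNACCEPTABLE = 4


# Illustrative examples taken from this article, not an official list.
EXAMPLE_TIERS = {
    "grammar checker": RiskTier.MINIMAL,
    "product information chatbot": RiskTier.LIMITED,
    "AI in medical devices": RiskTier.HIGH,
    "AI in critical infrastructure": RiskTier.HIGH,
    "social scoring": RiskTier.UNACCEPTABLE,
}


def is_permitted(tier: RiskTier) -> bool:
    """Only the unacceptable-risk class is prohibited outright."""
    return tier < RiskTier.UNACCEPTABLE


def strictest_tier(system_names):
    """Return the highest risk class among the named example systems."""
    return max(EXAMPLE_TIERS[name] for name in system_names)


if __name__ == "__main__":
    tools = ["grammar checker", "product information chatbot"]
    print(strictest_tier(tools).name)                      # LIMITED
    print(is_permitted(EXAMPLE_TIERS["social scoring"]))   # False
```

Ordering the classes this way makes it easy to ask which of your tools drives the strictest requirements, which is usually the first question in a risk assessment.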

Transparency requirements

Transparency requirements are not limited to high-risk AI systems. For example, generative foundation models like ChatGPT are not classified as high-risk, but must still comply with transparency obligations and EU copyright law.

That means:

- Disclosure: It must be clearly stated that content was created using AI.

- Avoiding illegal content: AI models must be designed in such a way that no illegal content can be generated.

- Copyright summary: Summaries of the copyrighted data used for training must be created and disclosed.

National authorities should also provide test environments in which AI models can be tested in a controlled manner before they are widely deployed.

EU AI Act Timeline

Not all provisions of the AI Regulation will be implemented immediately. They are staggered and also differ between companies deploying new AI and those with existing solutions. The current timeline is as follows:

- July 12, 2024: Publication of the AI Regulation (EU AI Act) in the Official Journal of the EU. The law is now official and valid.

- August 1, 2024: The regulation enters into force and a 24-month transition period begins.

- February 2, 2025:

  - Ban on AI systems with unacceptable risk (e.g. social scoring).

  - Start of mandatory AI literacy

- August 2, 2025:

  - Entry into force of central governance rules and obligations for providers of GPAI models (general-purpose AI such as ChatGPT).

- August 2, 2026:

  - Most of the obligations for high-risk AI systems come into force. These systems are listed in Annex III of the regulation, for example, AI in human resources or in the management of critical infrastructure.

  - Grandfathering: High-risk AI systems put into operation before this date do not have to implement the new rules immediately, as long as no significant changes are made. As soon as a significant change occurs, the grandfathering expires.

- August 2, 2027:

  - Full application of the law for certain high-risk AI systems listed in Annex I that are used as safety components in products (e.g. medical devices).

  - The grandfathering will also end for GPAI models that came onto the market before August 2, 2025.

- December 31, 2030:

  - Longer transition period for certain large public authority IT systems.

Member states still need to designate the competent authorities and lay down the rules on sanctions and fines in national law.
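To see how the staggered dates and the grandfathering rule fit together, here is a small Python sketch. The names `MILESTONES`, `milestones_in_effect` and `annex_iii_rules_apply` are hypothetical, the dates are the application milestones listed in this article, and the grandfathering logic is a simplified reading of the rule described above, so treat it as an aid for planning rather than legal advice.

```python
from datetime import date

# Application milestones as listed in this article (labels are summaries).
MILESTONES = [
    (date(2025, 2, 2), "Ban on unacceptable-risk AI; AI literacy requirement"),
    (date(2025, 8, 2), "Governance rules and obligations for GPAI providers"),
    (date(2026, 8, 2), "Most obligations for Annex III high-risk AI systems"),
    (date(2027, 8, 2), "Annex I high-risk systems; end of GPAI grandfathering"),
    (date(2030, 12, 31), "End of transition for large public authority IT systems"),
]

HIGH_RISK_START = date(2026, 8, 2)


def milestones_in_effect(on: date):
    """Return the labels of all milestones reached on or before `on`."""
    return [label for start, label in MILESTONES if start <= on]


def annex_iii_rules_apply(put_into_operation: date,
                          significantly_changed: bool,
                          on: date) -> bool:
    """Simplified grandfathering check for an Annex III high-risk system.

    Assumption: a system put into operation before the start date stays
    exempt until a significant change is made to it.
    """
    if on < HIGH_RISK_START:
        return False  # obligations have not started yet
    if put_into_operation < HIGH_RISK_START:
        return significantly_changed  # grandfathered unless changed
    return True  # new systems must comply from day one


if __name__ == "__main__":
    for label in milestones_in_effect(date(2025, 9, 1)):
        print(label)
    # An older recruiting tool that has not been changed is still grandfathered.
    print(annex_iii_rules_apply(date(2025, 1, 15), False, date(2026, 9, 1)))
```

A check like this can sit in a compliance calendar so that a planned upgrade to an older high-risk tool prompts a review before the change goes live.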

Impact of AI regulation on Employers

As mentioned, the EU AI Act imposes regulations on companies that develop, operate, or distribute AI applications within the EU. For companies, this means:

- Risk assessment: You must assess which risk category your AI systems fall into (unacceptable risk, high risk, limited risk, minimal risk). This applies to all AI model operators.

- Compliance obligations: Depending on the risk category, requirements such as transparency, technical documentation, certification, and reporting of serious incidents must be met. Operators of limited-risk AI systems, for example, only need to indicate that they are AI systems.

- Training: Employees must be trained in the safe and legally compliant use of AI in accordance with the AI literacy requirement. Under Article 4, this applies to providers and operators of AI systems in general, not only to operators of high-risk systems.

- Monitoring & Documentation: High-risk AI systems must be monitored, documented, and evaluated throughout their entire lifecycle.
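One way to turn these points into a working checklist is a simple inventory of the AI tools your company uses. The sketch below assumes a hypothetical `AISystemRecord` structure and `open_actions` helper; the rules it encodes are a simplified subset of the obligations summarised in this article (risk assessment, disclosure, documentation, monitoring), not the full legal requirements.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class AISystemRecord:
    """Hypothetical inventory entry for one AI tool used in the company."""
    name: str
    risk_category: Optional[str] = None  # "minimal", "limited", "high", "unacceptable"
    discloses_ai_use: bool = False
    has_technical_docs: bool = False
    is_monitored: bool = False


def open_actions(record: AISystemRecord) -> List[str]:
    """List the follow-up steps still missing for one system (simplified)."""
    if record.risk_category is None:
        return ["assess the risk category"]
    if record.risk_category == "unacceptable":
        return ["stop using the system: this category is prohibited"]

    actions = []
    if record.risk_category == "limited" and not record.discloses_ai_use:
        actions.append("indicate that this is an AI system")
    if record.risk_category == "high":
        if not record.has_technical_docs:
            actions.append("prepare technical documentation")
        if not record.is_monitored:
            actions.append("set up lifecycle monitoring")
    return actions


if __name__ == "__main__":
    inventory = [
        AISystemRecord("support chatbot", "limited"),
        AISystemRecord("CV screening", "high", has_technical_docs=True),
        AISystemRecord("meeting summariser"),
    ]
    for record in inventory:
        print(record.name, "->", open_actions(record))
```

Keeping the inventory as structured data makes it easier for HR, legal and finance to work from the same list when planning audits.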

What HR Leaders Need to Consider

The impact AI has on HR teams is undeniable, particularly for small teams or one-person departments. AI tools used during the recruitment process have saved teams time and money on tasks such as creating job descriptions, centralising candidate information and sorting through CVs. Such systems will have to meet AI compliance regulations by 2027. Your HR team will have to audit its AI recruitment software to ensure it complies with transparency requirements. Moreover, HR tech and other advanced digital tools used in the EU will need to be demonstrably fair, transparent and safe. Additionally, HR leaders will need to prepare their team for AI literacy training. This protects users from falling victim to malicious AI threats or spam.

Why CEOs Should Pay Attention

Business leaders and CEOs will need to find a balance between innovation and compliance. When it comes to artificial intelligence, all systems used, created, or distributed within the EU will need to comply with these new regulations by 2027. It’s essential to stay informed, communicate any changes and adapt your processes accordingly to avoid incurring costly penalties. Leaders who are proactive and implement policies to ensure compliance will be setting up their company for success in the long run. Whether or not they operate in the EU, CEOs and leaders must take action now, as additional regulations across the globe are inevitable.

Compliance Costs for Finance Teams

Finance teams should be aware of the costly risk their company could face due to non-compliance with the EU AI Act. Being informed of AI regulations can help finance teams allocate budget for audits, compliance programs, and staff training. Through careful preparation, organizations can lessen the financial risk of fines. If you’re a financial leader, start working with your HR and legal teams to strategically plan and mitigate financial risk.

How Factorial AI Supports Compliance

Many companies now use various AI applications in their daily work. Much of the responsibility for compliance with legal requirements lies with the developers of the systems, although companies that use them have obligations of their own, as described above.

With Factorial AI, companies gain a solution that truly understands their structures, teams, and goals. Instead of wasting valuable time on manual processes, tasks can be completed up to three times faster. The result: clear, data-driven insights that enable growth. This allows you to increase your impact while reducing costs – a real support in your everyday work.

- Performance management platform: Live transcription of 1:1 meetings, structured recaps with context (pulse signals, previous notes, KPI changes) and automatic creation of an editable action plan

- ATS Recruitment: Leverage AI to write your job descriptions, match you to the top candidates, provide CV summaries and accelerate the recruiting process

- Rota software: Automated shift planning supported by AI to create and quickly publish schedules for your whole team

- Finances: Mobile receipt scanning, AI OCR data capture, automatic policy and cost center assignment, reminders for timely submission to gain tighter control of your expenses

- Analytics/Reports: Instant answers from real-time data, automatic reporting, precise analytics without Excel or analysts

With Factorial’s AI business software, teams benefit from the advantages of AI without taking legal risks and can be confident that all features are implemented in compliance with EU regulations.

FAQs about the EU AI Act: UK Employers Edition

Why should UK businesses be concerned about EU legislation?

Because the EU AI Act can apply outside the EU. If your UK business has local entities in the EU, employs EU residents, recruits employees from EU countries, or uses AI systems that affect EU nationals or residents, the EU AI Act will apply to you.

We don’t use AI systems, should we still be concerned?

Yes, the EU AI Act regulates all AI systems, including common general-purpose AI tools such as ChatGPT and Copilot. It’s recommended to double-check what systems your organisation is using or looking into, as there’s a chance you will be affected.

Does the EU AI Act apply to the UK?

Yes and no. The EU AI Act applies to UK companies that develop, deploy, or use AI systems within the EU. If any of those situations apply to you as a UK employer, then yes, you will be affected by the EU AI Act. However, if none of those situations apply to you, then you will not be obligated to comply with AI regulations stated in the EU AI Act.

When was the EU AI Act passed?

The EU AI Act officially entered into force on August 1, 2024. The legislation will be implemented in stages over the upcoming years and will be fully applicable by August 2, 2027.